What are Textual Inversions and what do they do in Stable Diffusion?

Overview

Textual Inversions (TIs) are small AI models, technically learned text embeddings, that can strongly change the results from a base model and give you a better visual output. Think of a TI as a very strong magnifying glass: it can be trained to zero in on what’s good.

Textual Inversions are similar to LoRAs, but smaller and more limited. We use them because they are extremely good at two things: negatives, and saving tokens*. They can also be used to render focused art styles and character likenesses, but LoRAs are much more commonly used for this.

*Tokens are the pieces your prompt is split into, roughly one per word or word fragment. The golden rule is to stay under 75 tokens, so not having to type ghastly things like [mutated hands, five fingers, etc.] saves you both time and tokens, and images just look better. This is the main reason we love TIs and why you should study them, too.

Positive and Negative TIs, and how to tell them apart

The Stable Diffusion community has been very good about giving Textual Inversions appropriate names to differentiate positive and negative TIs. If the name sounds negative in nature, like “Bad Hands” or “Very Bad” or “Absolutely Horrible” you can probably guess that the trigger tag, the word that activates the effect, must be placed in your negative prompt.

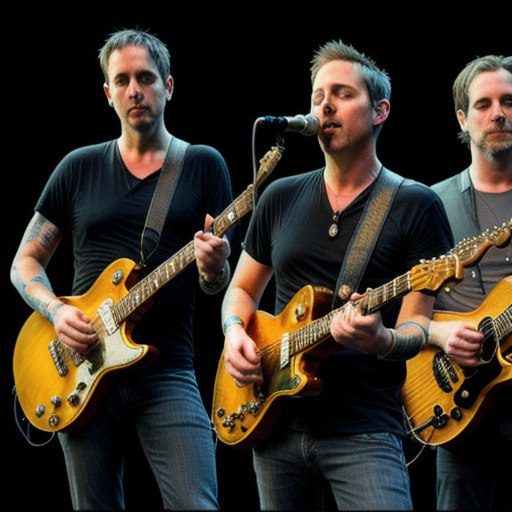

Let’s first create an image without a negative to compare:

/render /seed:123 /guidance:8 /sampler:upms /steps:50 /images:1 toad the wet sprocket <realvis51>

See how fuzzy the image is? They barely look human. Let’s fix it with a TI.

Pro members: You can also add /size:768x768 for even more pleasing results.

To use a negative TI, place it within [[ ]]. Otherwise it will load as a positive, and your image will look much worse. Copy this example:

/render /seed:123 /guidance:8 /sampler:upms /steps:50 /images:1 toad the wet sprocket [[<verybad-negative:-2>]] <realvis51>

Much better! All the guys are in focus, and nobody is covered in peach fuzz.

But there’s still room for improvement: we can add positive TIs to make characters look more accurate, add LoRAs, and stack multiple negative TIs (fix the hands!) to make this image shine, and then follow through with /highdef and /facelift to make it really pop.

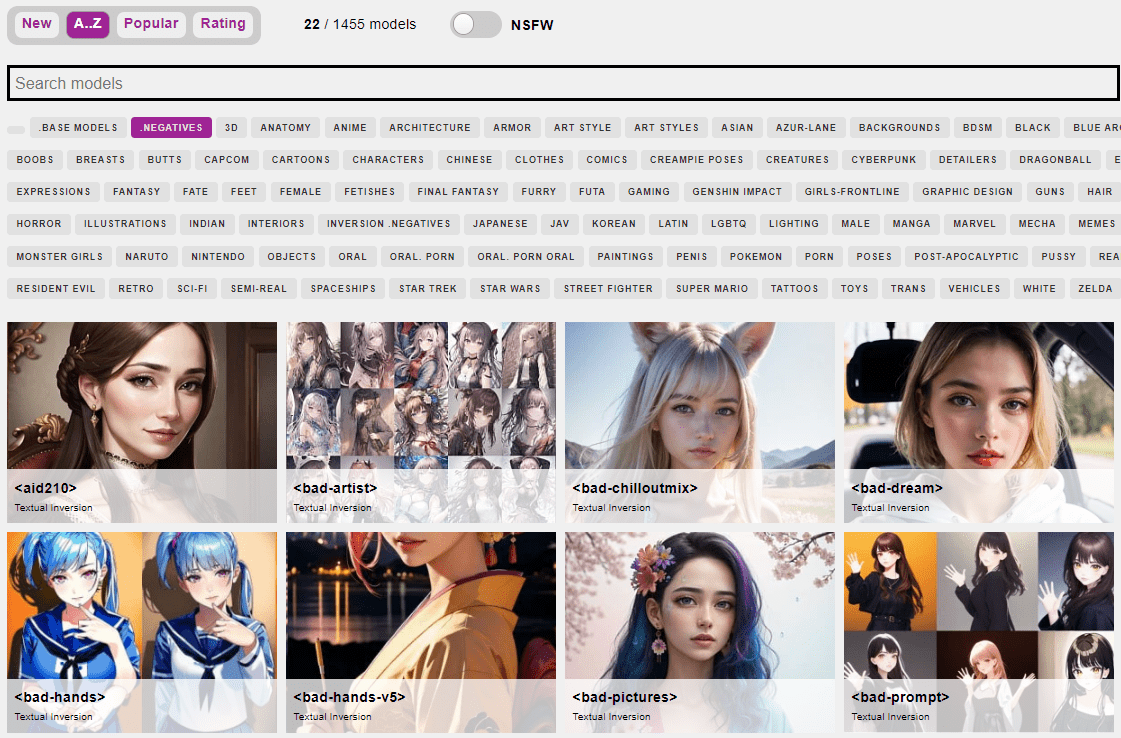

When using Graydient software, you’ll find the negatives conveniently located next to the base models at the start of our concepts system, as shown here:

The more obscure ones, like “aid210”, don’t have helpful-sounding names. If you’re ever not sure, just click into one to read the description and see an example.

Base Model Specific Negatives also exist

Pictured above: This is the promotional photo for “Bad ChilloutMix”, which makes a crisp photo like this possible without having to type a long prompt when combined with the Chillout Mix base model. The TI used in a prompt would look something like this:

/render A beautiful woman with cat ears [[<bad-chilloutmix:-2>]] <chilloutmix>

Translation: I’m still prompting and still calling the <chilloutmix> base model, but in my negative prompt I’ve added the negative TI with a negative weight. In effect, we are saying “pay attention to every bad result common in chilloutmix, at negative 2x strength,” which pushes the image away from those bad results.
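To make the bracket-and-weight syntax concrete, here is a minimal Python sketch that assumes only the prompt syntax shown in this article. build_render_prompt is a hypothetical helper of our own, not part of Graydient’s software; it just assembles command text, placing each negative TI as <name:weight> inside [[ ]] and the base model trigger at the end.

```python
def build_render_prompt(subject, base_model, negative_tis, options=None):
    """Hypothetical helper: assemble a /render command string (text only).

    negative_tis maps a TI trigger name to its negative weight, for example
    {"bad-chilloutmix": -2}. Each one is written as <name:weight> inside the
    [[ ]] brackets so it is not loaded as a positive.
    Nothing is sent anywhere; this only builds the text you would type.
    """
    parts = ["/render"]
    # Optional settings such as seed or sampler become /key:value flags.
    for key, value in (options or {}).items():
        parts.append(f"/{key}:{value}")
    parts.append(subject)
    if negative_tis:
        triggers = " ".join(f"<{name}:{weight:g}>" for name, weight in negative_tis.items())
        parts.append(f"[[{triggers}]]")
    # The base model trigger goes last, as in the article's examples.
    parts.append(f"<{base_model}>")
    return " ".join(parts)


print(build_render_prompt("A beautiful woman with cat ears", "chilloutmix", {"bad-chilloutmix": -2}))
```

Running it prints the same command as the Chillout Mix example above. Passing options such as {"seed": 123, "guidance": 8} adds the matching /seed and /guidance flags in front of the subject.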

Whether or not model-specific TIs work better than other negatives is hard to say, but they’re a good place to start. We suggest adding more. Most people stack 4-5 different TIs that they trust and stick with those for specific art styles.

Two final words of advice

1) Don’t overdo it, but do experiment

Some TIs are built specifically for illustrations and will make realistic images look much worse, so adding all of them without understanding what they do is not a good idea. For example, combining <bad-artist-anime> with <realvis51> will produce unpredictable results. Experiment to find the sweet spot for your style, and lock the seed with the settings below so your comparisons are fair.

Test with /images:1 /seed:123 /steps:35 /sampler:upms in your prompt so you can make quick comparisons. This returns one fast, consistent image.
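Here is a minimal Python sketch of that testing routine, assuming you add negative TIs one at a time. comparison_prompts is a hypothetical helper, and the TI names are simply ones used elsewhere in this article. It only produces /render lines for you to paste, with the seed and settings locked so the TIs are the only thing that changes between images.

```python
# Fixed test settings from the tip above: one fast, consistent image per run.
TEST_FLAGS = "/images:1 /seed:123 /steps:35 /sampler:upms"


def comparison_prompts(subject, base_model, candidate_tis, weight=-2):
    """Hypothetical helper: one /render line per step, stacking one more TI each time.

    The first line has no negatives (the baseline). Because the seed and
    settings never change, differences between the images come from the TIs.
    """
    prompts = [f"/render {TEST_FLAGS} {subject} <{base_model}>"]
    for i in range(1, len(candidate_tis) + 1):
        triggers = " ".join(f"<{name}:{weight}>" for name in candidate_tis[:i])
        prompts.append(f"/render {TEST_FLAGS} {subject} [[{triggers}]] <{base_model}>")
    return prompts


for line in comparison_prompts("toad the wet sprocket", "realvis51", ["verybad-negative", "easy-negative"]):
    print(line)
```

Stacking cumulatively, rather than testing each TI alone, mirrors how most people end up using 4-5 trusted TIs together.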

2) Don’t duplicate TIs or their trigger tokens

Your prompt will crash if the tokens for a textual inversion are repeated. So if you prompt [bad hands] and [[<bad-hands:-1.5>]] and also use a recipe like #boost, which contains bad hands too, it will try to load that TI 3 times and you’ll get something awful. Build slowly and make sure you fully understand what your recipes contain. This is an easy mistake to make when copying and pasting from your favorite prompts. In a future update, we’ll add alert messages to our software to give you a heads-up when this happens.
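A check like the planned alert could look roughly like the Python sketch below. It is our own hypothetical illustration, not the software’s actual check. It expands recipe hashtags from a dictionary you supply, then counts <name> and <name:weight> triggers. It will not catch plain-word repeats such as [bad hands], and the #boost text in the example is made up, since this sketch does not know what that recipe really contains.

```python
import re
from collections import Counter

# Matches <name> or <name:weight>, e.g. <realvis51> or <bad-hands:-1.5>,
# and captures only the name.
TRIGGER = re.compile(r"<([A-Za-z0-9_.-]+)(?::-?\d+(?:\.\d+)?)?>")
# Matches recipe hashtags such as #boost and captures the recipe name.
HASHTAG = re.compile(r"#([A-Za-z0-9_-]+)")


def find_duplicate_triggers(prompt, recipes=None):
    """Hypothetical check: list trigger names that appear more than once.

    recipes maps a recipe name to its text, supplied by you. Hashtags are
    expanded in a single pass before counting; unknown ones are left as-is.
    """
    recipes = recipes or {}
    expanded = HASHTAG.sub(lambda m: recipes.get(m.group(1), m.group(0)), prompt)
    counts = Counter(TRIGGER.findall(expanded))
    return sorted(name for name, n in counts.items() if n > 1)


# Made-up recipe text for illustration only; it is not the real #boost.
example_recipes = {"boost": "masterpiece [[<bad-hands:-1>]]"}
print(find_duplicate_triggers("/render a knight [[<bad-hands:-1.5>]] #boost <realvis51>", example_recipes))
```

Here the sketch reports bad-hands, because the TI appears once in the prompt and once inside the (hypothetical) recipe text.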

FAQs

Do textual inversions work with /parser:new?

Partially. At the moment, they respond only to weights of -2 and -1. The syntax is the same. Here’s an example of TIs at work with /parser:new.

For the uninitiated, /parser:new is a mode that turns on per-token weights for more granular control, like (high quality:1.4). Without it, these weights are ignored, because our default parser uses ((high quality)) instead.

Try this amazing prompt, provided by Squibduck, as shown above:

/render /parser:new /seed:487322 /sampler:ddim /steps:35 /guidance:6 /images:9 /size:768x960 /clipskip:2 detailed, solo, 1girl, pov, platinum blonde ponytails, cloak, glittering Chainmail, adult Zelda (beautiful and aesthetic:1.2), (masterpiece, best quality, ultra-detailed), (best quality, high quality, absurdres, masterpiece), (best quality, high quality:1.4), dynamic, highly detailed, ✨ Dof, bokeh, cinematic camera <masterpiece:0.5> <detail-make:0.6> [[[<easy-negative:-2> <deep-negative:-2> simple backround, blank backround, no backround, unshaven, hairy, obese, beads,(worst quality, low quality:1.5)]]] <photon>

Difference between Textual Inversions and LoRAs

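They’re both models, but they plug into the diffusion process at different places. A Textual Inversion only teaches the text encoder a new “word” (a learned embedding), while a LoRA adjusts weights across many layers of the model itself. That is why LoRAs are larger files and are better suited for training on images of art styles and people’s faces.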

How is it different from a recipe called “easy negative”?

Recipes are just text. They are created by the community, and you can also create your own to save time. A recipe works much like a saved prompt, and it can contain the trigger for a Textual Inversion. So if you have a bunch of favorite TIs, you can make your own recipe that fires all of them in one shot and saves you the typing.

We do this because it’s much easier to type #hashtags than [[<easy-negative:-1.5>]] [mutated hands, three legs, six fingers, dehydrated, etc.].
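Because recipes are plain text, the idea can be sketched in a few lines of Python. make_recipe and expand are hypothetical helpers, and the recipe name myneg is made up. The sketch writes your favorite negatives out once, then swaps each #hashtag for its stored text, which is exactly the typing a recipe saves you.

```python
import re


def make_recipe(negative_tis, extra_negatives=()):
    """Hypothetical helper: build the text a personal recipe would stand for.

    negative_tis maps TI trigger names to weights, e.g. {"easy-negative": -1.5}.
    extra_negatives are plain words, written in single brackets as in the
    article's example.
    """
    triggers = " ".join(f"<{name}:{weight:g}>" for name, weight in negative_tis.items())
    text = f"[[{triggers}]]"
    if extra_negatives:
        text += " [" + ", ".join(extra_negatives) + "]"
    return text


def expand(prompt, recipes):
    """Hypothetical helper: replace each #name with its stored recipe text.

    Unknown hashtags are left untouched. This is a single pass, so a recipe
    that mentions another recipe is not expanded further.
    """
    return re.sub(r"#([A-Za-z0-9_-]+)", lambda m: recipes.get(m.group(1), m.group(0)), prompt)


recipes = {"myneg": make_recipe({"easy-negative": -1.5}, ["mutated hands", "three legs", "six fingers"])}
print(expand("/render a toad on a lily pad #myneg <realvis51>", recipes))
```

The printed command contains the full [[<easy-negative:-1.5>]] [mutated hands, three legs, six fingers] text, even though only #myneg was typed.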